Smoothed Analysis Homepage

[Introduction]

[Papers]

[Surveys]

[FAQ]

[Acknowledgement]

Introduction

The smoothed analysis of algorithms

was introduced in the paper

Smoothed Analysis of Algorithms:

Why The Simplex Algorithm Usually Takes Polynomial Time,

by

Daniel A. Spielman

and

Shang-Hua Teng.

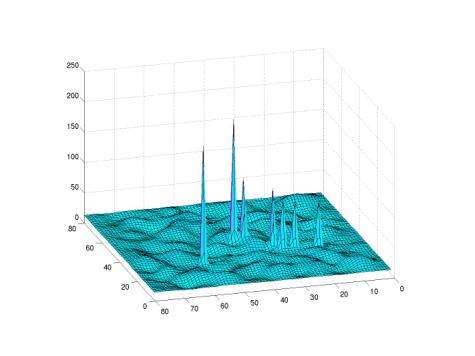

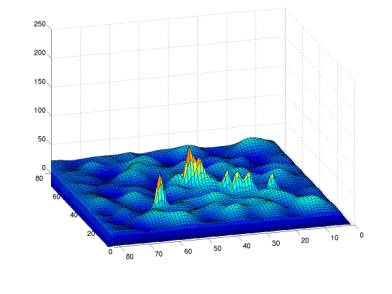

It is an alternative to the

standard worst-case and average-case analyses.

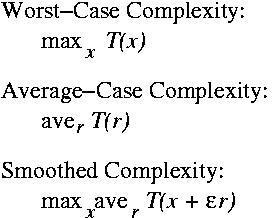

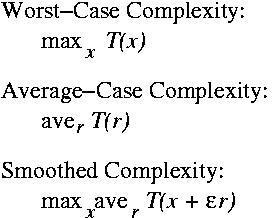

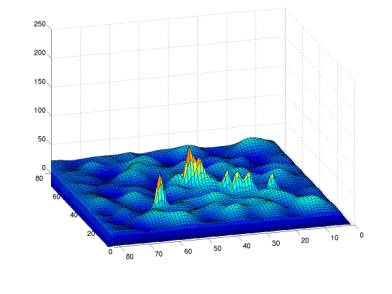

The smoothed complexity of an algorithm is

the maximum over its inputs of the expected running time of

the algorithm under slight perturbations of that input.

Smoothed complexity is measured in terms of the size of the input and

the magnitude of the perturbation.

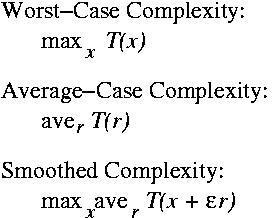

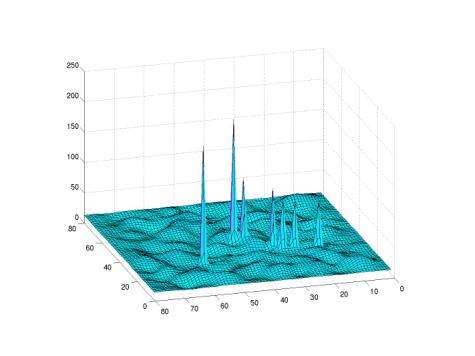

Another way of understanding smoothed complexity is to view

the running time of an algorithm as a function from its inputs

to time (in the figure below, the inputs axes a represented by

the X and Y axes, and time is represented by the Z axis).

Worst-case complexity measures the highest peak in the plot

of the function.

If one uses Gaussian perturbations, then

Smoothed complexity measures the highest peak after the fuction

has been convolved with a Gaussian.

If the highest peaks in the complexity function are relatively isolated

(think numerically unstable) then convolving with a Gaussian

will greatly reduce their height.

If one uses Gaussian perturbations, then

Smoothed complexity measures the highest peak after the fuction

has been convolved with a Gaussian.

If the highest peaks in the complexity function are relatively isolated

(think numerically unstable) then convolving with a Gaussian

will greatly reduce their height.

Daniel A. Spielman

Last modified: Thu Jul 25 00:11:30 EDT 2002