Consolidated lecture notes for CS223 as taught in the Spring 2005 semester at Yale by Jim Aspnes.

Contents

- C/Variables

- Machine memory

- Variables

- Using variables

- C/IntegerTypes

- Integer types

- Integer constants

- Integer operators

- Input and output

- Alignment

- C/Statements

- Simple statements

- Compound statements

- C/AssemblyLanguage

- C/Functions

- Function definitions

- Calling a function

- The return statement

- Function declarations and modules

- Static functions

- Local variables

- Mechanics of function calls

- C/InputOutput

- Character streams

- Reading and writing single characters

- Formatted I/O

- Rolling your own I/O routines

- File I/O

- C/Pointers

- Memory and addresses

- Pointer variables

- The null pointer

- Pointers and functions

- Pointer arithmetic and arrays

- Void pointers

- Run-time storage allocation

- The restrict keyword

- C/DynamicStorageAllocation

- Dynamic storage allocation in C

- Overview

- Allocating space with malloc

- Freeing space with free

- Dynamic arrays using realloc

- Example of building a data structure with malloc

- C/Strings

- String processing in general

- C strings

- String constants

- String buffers

- Operations on strings

- Finding the length of a string

- Comparing strings

- Formatted output to strings

- Dynamic allocation of strings

- argc and argv

- C/Structs

- Structs

- Unions

- Bit fields

- AbstractDataTypes

- Abstraction

- Example of an abstract data type

- Designing abstract data types

- C/Definitions

- Naming types

- Naming constants

- Naming values in sequences

- Other uses of #define

- AsymptoticNotation

- Definitions

- Motivating the definitions

- Proving asymptotic bounds

- Asymptotic notation hints

- Variations in notation

- More information

- LinkedLists

- Stacks and linked lists

- Looping over a linked list

- Looping over a linked list backwards

- Queues

- Deques and doubly-linked lists

- Circular linked lists

- What linked lists are and are not good for

- Further reading

- C/Debugging

- Debugging in general

- Assertions

- gdb

- Valgrind

- Not recommended: debugging output

- TestingDuringDevelopment

- Setting up the test harness

- Stub implementation

- Bounded-space implementation

- First fix

- Final version

- Moral

- Appendix: Test macros

- LinkedLists

- Stacks and linked lists

- Looping over a linked list

- Looping over a linked list backwards

- Queues

- Deques and doubly-linked lists

- Circular linked lists

- What linked lists are and are not good for

- Further reading

- HashTables

- Hashing: basics

- Universal hash families

- FKS hashing with O(s) space and O(1) worst-case search time

- Cuckoo hashing

- Bloom filters

- C/FunctionPointers

- Basics

- Function pointer declarations

- Applications

- Closures

- Objects

- C/PerformanceTuning

- Timing under Linux

- Profiling with gprof

- AlgorithmDesignTechniques

- Basic principles of algorithm design

- Classifying algorithms by structure

- Example: Finding the maximum

- Example: Sorting

- DynamicProgramming

- Memoization

- Dynamic programming

- Dynamic programming: algorithmic perspective

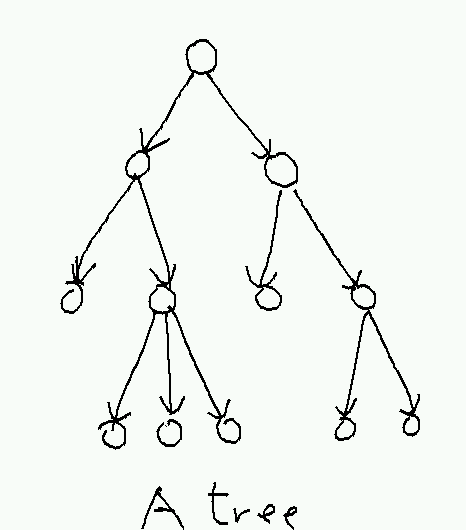

- BinaryTrees

- Tree basics

- Binary tree implementations

- The canonical binary tree algorithm

- Nodes vs leaves

- Special classes of binary trees

- Heaps

- Priority queues

- Expensive implementations of priority queues

- Heaps

- Packed heaps

- Bottom-up heapification

- Heapsort

- More information

- BinarySearchTrees

- Searching for a node

- Inserting a new node

- Costs

- BalancedTrees

- The basics: tree rotations

- AVL trees

- 2–3 trees

- Red-black trees

- B-trees

- Splay trees

- Skip lists

- Implementations

- RadixSearch

- Tries

- Patricia trees

- Ternary search trees

- More information

- C/BitExtraction

- C/Graphs

- Graphs

- Why graphs are useful

- Operations on graphs

- Representations of graphs

- Searching for paths in a graph

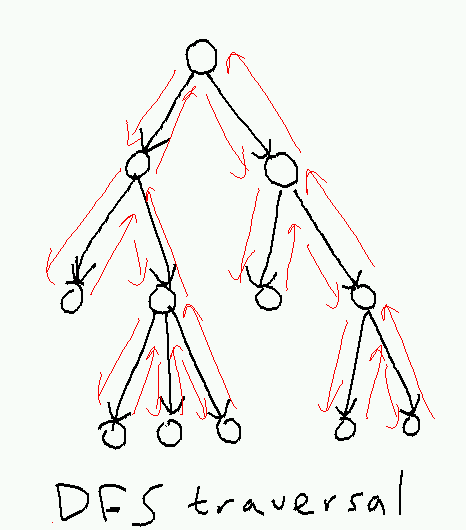

- DepthFirstSearch

- Depth-first search in a tree

- Depth-first search in a directed graph

- DFS forests

- Running time

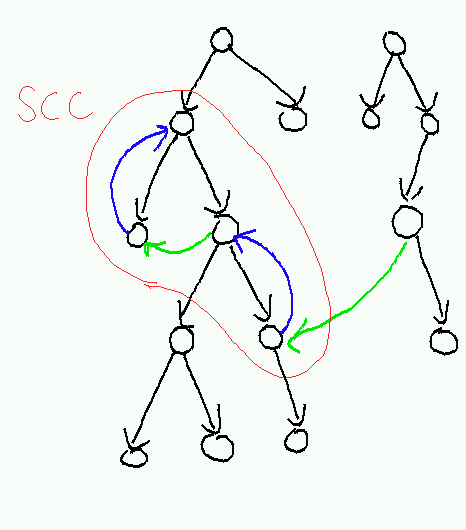

- A more complicated application: strongly-connected components

- BreadthFirstSearch

- ShortestPath

- Single-source shortest paths

- All-pairs shortest paths

- Implementations

- C/FloatingPoint

- Floating point basics

- Floating-point constants

- Operators

- Conversion to and from integer types

- The IEEE-754 floating-point standard

- Error

- Reading and writing floating-point numbers

- Non-finite numbers in C

- The math library

- C/Persistence

- Persistent data

- A simple solution using text files

- Using a binary file

- A version that updates the file in place

- An even better version using mmap

- Concurrency and fault-tolerance issues: ACIDity

- C/WhatNext

- What's wrong with C

- What C++ fixes

- Other C-like languages to consider

- Scripting languages

1. C/Variables

2. Machine memory

Basic model: machine memory consists of many bytes of storage, each of which has an address which is itself a sequence of bits. Though the actual memory architecture of a modern computer is complex, from the point of view of a C program we can think of it as simply a large address space that the CPU can store things in (and load things from), provided it can supply an address to the memory. Because we don't want to have to type long strings of bits all the time, the C compiler lets us give names to particular regions of the address space, and will even find free space for us to use.

3. Variables

A variable is a name given in a program for some region of memory. Each variable has a type, which tells the compiler how big the region of memory corresponding to it is and how to treat the bits stored in that region when performing various kinds of operations (e.g. integer variables are added together by very different circuitry than floating-point variables, even though both represent numbers as bits). In modern programming languages, a variable also has a scope (a limit on where the name is meaningful, which allows the same name to be used for different variables in different parts of the program) and an extent (the duration of the variable's existence, controlling when the program allocates and deallocates space for it).

3.1. Variable declarations

Before you can use a variable in C, you must declare it. Variable declarations show up in three places:

Outside a function. These declarations declare global variables that are visible throughout the program (i.e. they have global scope). Use of global variables is almost always a mistake.

In the argument list in the header of a function. These variables are parameters to the function. They are only visible inside the function body (local scope), exist only from when the function is called to when the function returns (bounded extent—note that this is different from what happens in some garbage-collected languages like Scheme), and get their initial values from the arguments to the function when it is called.

At the start of any block delimited by curly braces. Such variables are visible only within the block (local scope again) and exist only when the containing function is active (bounded extent). The convention in C has generally been to declare all such local variables at the top of a function; this is different from the convention in C++ or Java, which encourage variables to be declared when they are first used. This convention may be less strong in C99 code, since C99 adopts the C++ rule of allowing variables to be declared anywhere (which can be particularly useful for index variables in for loops).

Variable declarations consist of a type name followed by one or more variable names separated by commas and terminated by a semicolon. Argument lists are the exception: there, each parameter needs its own type name, and the declarations are separated by commas rather than terminated by semicolons. I personally find it easiest to declare variables one per line, to simplify documenting them. Global and local variables (but not function arguments) can also be given an initial value by putting something like = 0 after the variable name. It is good practice to put a comment after each variable declaration that explains what the variable does (with a possible exception for conventionally-named loop variables like i or j in short functions). Below is an example of a program with some variable declarations in it:

```c
#include <stdio.h>
#include <ctype.h>

/* This program counts the number of digits in its input. */

/*
 * This global variable is not used; it is here only to demonstrate
 * what a global variable declaration looks like.
 */
unsigned long SpuriousGlobalVariable = 127;

int
main(int argc, char **argv)
{
    int c;              /* character read */
    int count = 0;      /* number of digits found */

    while((c = getchar()) != EOF) {
        if(isdigit(c)) {
            count++;
        }
    }

    printf("%d\n", count);

    return 0;
}
```

3.2. Variable names

The evolution of variable names in different programming languages:

- 11101001001001: Physical addresses represented as bits.

- #FC27: Typical assembly language address represented in hexadecimal to save typing (and because it's easier for humans to distinguish #A7 from #B6 than to distinguish 10100111 from 10110110).

- A1$: A string variable in BASIC, back in the old days when BASIC variables were one uppercase letter, optionally followed by a number, optionally followed by $ for a string variable or % for an integer variable. These type tags were used because BASIC interpreters didn't have a mechanism for declaring variable types.

- IFNXG7: A typical FORTRAN variable name, back in the days of 6-character all-caps variable names. The I at the start means it's an integer variable. The rest of the letters probably abbreviate some much longer description of what the variable means. The default type based on the first letter was used because FORTRAN programmers were lazy, but it could be overridden by an explicit declaration.

- i, j, c, count, top_of_stack, accumulatedTimeInFlight: Typical names from modern C programs. There is no type information contained in the name; the type is specified in the declaration and remembered by the compiler elsewhere. Note that there are two different conventions for representing multi-word names. The first replaces spaces with underscores. The second capitalizes the first letter of each word (possibly excluding the first word), a style called "camel case" (CamelCase). You should pick one of these two conventions and stick to it.

- prgcGradeDatabase: An example of Hungarian notation, a style of variable naming in which the type of the variable is encoded in the first few characters. The type is now back in the variable name again. This is not enforced by the compiler: even though iNumberOfStudents is supposed to be an int, there is nothing to prevent you from declaring float iNumberOfStudents if you expect to have fractional students for some reason. See http://web.umr.edu/~cpp/common/hungarian.html or HungarianNotation. Hungarian notation is not clearly an improvement on standard naming conventions, but it is popular in some programming shops.

In C, variable names are called identifiers.1 An identifier in C must start with a lower or uppercase letter or the underscore character _. Typically variables starting with underscores are used internally by system libraries, so it's dangerous to name your own variables this way. Subsequent characters in an identifier can be letters, digits, or underscores. So for example a, ____a___a_a_11727_a, AlbertEinstein, aAaAaAaAaAAAAAa, and ______ are all legal identifiers in C, but $foo and 01 are not.

The basic principle of variable naming is that a variable name is a substitute for the programmer's memory. It is generally best to give identifiers names that are easy to read and describe what the variable is used for, i.e., that are self-documenting. None of the identifiers in the preceding paragraph are any good by this standard. Better names would be total_input_characters, dialedWrongNumber, or stepsRemaining. Non-descriptive single-character names are acceptable for certain conventional uses, such as the use of i and j for loop iteration variables, or c for an input character. Such names should only be used when the scope of the variable is small, so that it's easy to see all the places where it is used at once.

C identifiers are case-sensitive, so aardvark, AArDvARK, and AARDVARK are all different variables. Because it is hard to remember how you capitalized something before, it is important to pick a standard convention and stick to it. The traditional convention in C goes like this:

Ordinary variables and functions are lowercased or camel-cased, e.g. count, countOfInputBits.

User-defined types (and in some conventions global variables) are capitalized, e.g. Stack, TotalBytesAllocated.

Constants created with #define or enum are put in all-caps: MAXIMUM_STACK_SIZE, BUFFER_LIMIT.

4. Using variables

Ignoring pointers (C/Pointers) for the moment, there are essentially two things you can do to a variable. You can assign a value to it using the = operator (for example, x = 2; stores 2 in x), or you can use its value in an expression:

```c
x = y+1;    /* assign y+1 to x */
```

The assignment operator is an ordinary operator, and assignment expressions can be used in larger expressions:

```c
x = (y=2)*3;    /* sets y to 2 and x to 6 */
```

This feature is usually only used in certain standard idioms, since it's confusing otherwise.

There are also shorthand operators for expressions of the form variable = variable operator expression. For example, writing x += y is equivalent to writing x = x + y, x /= y is the same as x = x / y, etc.

For the special case of adding or subtracting 1, you can abbreviate still further with the ++ and -- operators. These come in two versions. Which one you use determines whether the result of the expression (if used in a larger expression) is the value of the variable before or after the variable is incremented.

The intuition is that if the ++ comes before the variable, the increment happens before the value of the variable is read (a preincrement); if it comes after, it happens after the value is read (a postincrement). This is confusing enough that it is best not to use the value of preincrement or postincrement operations except in certain standard idioms. But using x++ by itself as a substitute for x = x+1 is perfectly acceptable style.
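The sketch below contrasts the two forms and also shows the compound-assignment shorthand described above. It assumes nothing beyond standard C, and the function names are invented for illustration.

```c
/* Each function starts with x = start and reports the value of one expression. */

/* y = x++ gives y the OLD value of x; x still ends up incremented. */
int value_of_postincrement(int start)
{
    int x = start;
    int y = x++;    /* y == start, x == start + 1 */
    return y;
}

/* y = ++x gives y the NEW value of x. */
int value_of_preincrement(int start)
{
    int x = start;
    int y = ++x;    /* y == start + 1, x == start + 1 */
    return y;
}

/* Compound assignment: x += 3 means x = x + 3, and x *= 2 means x = x * 2. */
int compound_assignment(int start)
{
    int x = start;
    x += 3;
    x *= 2;
    return x;       /* (start + 3) * 2 */
}
```

For example, value_of_postincrement(5) is 5 while value_of_preincrement(5) is 6.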

5. C/IntegerTypes

6. Integer types

In order to declare a variable, you have to specify a type, which controls both how much space the variable takes up and how the bits stored within it are interpreted in arithmetic operators.

The standard C integer types are:2

| Name | Typical size | Signed by default? |
|---|---|---|
| char | 8 bits | Unspecified |
| short | 16 bits | Yes |
| int | 32 bits | Yes |
| long | 32 bits | Yes |
| long long | 64 bits | Yes |

The typical size is for 32-bit architectures like the Intel i386. Some 64-bit machines might have 64-bit ints and longs (64-bit Linux, for example, uses 64-bit longs), and some prehistoric computers had 16-bit ints. Particularly bizarre architectures might have even wilder bit sizes, but you are not likely to see this unless you program vintage 1970s supercomputers. The long long type is at least 64 bits (twice the length of a long on i386 machines). It is standard in C99 but not in the older ANSI C (C89) standard, so it may not be available if you insist on following that standard strictly. The general convention is that int is the most convenient size for whatever computer you are using and should be used by default.
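Because the sizes vary from machine to machine, a program can ask the compiler instead of guessing. This is a minimal sketch that assumes a C99 compiler, since it uses long long, LLONG_MAX, and the %zu format. The function names are illustrative, and the sizes printed are storage sizes, so they will differ between machines.

```c
#include <limits.h>
#include <stdio.h>

/*
 * The C standard guarantees only minimum ranges; <limits.h> reports the
 * actual ranges for the compiler in use. Returns 1 if the guaranteed
 * minimums hold, which should always be true on a conforming C99 compiler.
 */
int meets_minimum_ranges(void)
{
    return SHRT_MAX >= 32767
        && INT_MAX >= 32767
        && LONG_MAX >= 2147483647L
        && LLONG_MAX >= 9223372036854775807LL;
}

/* Prints the storage size of each standard integer type in bits. */
void print_integer_sizes(void)
{
    printf("char:      %zu bits\n", sizeof(char) * CHAR_BIT);
    printf("short:     %zu bits\n", sizeof(short) * CHAR_BIT);
    printf("int:       %zu bits\n", sizeof(int) * CHAR_BIT);
    printf("long:      %zu bits\n", sizeof(long) * CHAR_BIT);
    printf("long long: %zu bits\n", sizeof(long long) * CHAR_BIT);
}
```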

Whether a variable is signed or not controls how its values are interpreted. Signed integers on essentially all modern machines use 2's complement notation, in which the leftmost bit acts as the sign bit. So, for example, a signed char with bit pattern 11111111 would be interpreted as the numerical value -1, while an unsigned char with the same bit pattern would be 255. Most integer types are signed unless otherwise specified; an $n$-bit signed integer type has a range from $-2^{n-1}$ to $2^{n-1}-1$ (e.g. -32768 to 32767 for a 16-bit short). Unsigned variables, which can be declared by putting the keyword unsigned before the type, have a range from $0$ to $2^n-1$ (e.g. 0 to 65535 for an unsigned short).

For chars, whether the character is signed (-128..127) or unsigned (0..255) is at the whim of the compiler. If it matters, declare your variables as signed char or unsigned char. For storing actual characters that you aren't doing arithmetic on, it shouldn't matter.
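The -1 versus 255 example above can be checked directly. Converting a signed value to an unsigned type is well defined in C: the value is reduced modulo $2^n$. On a 2's complement machine this simply reinterprets the same bit pattern. This is a minimal sketch assuming 8-bit chars, and the function name is invented for illustration.

```c
/* Converts a signed char to unsigned char. The result is the value modulo
 * 256 (assuming 8-bit chars), e.g. bit pattern 11111111: -1 becomes 255. */
unsigned char as_unsigned_char(signed char c)
{
    return (unsigned char) c;
}
```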

6.1. C99 fixed-width types

C99 provides a stdint.h header file that defines integer types with known size independent of the machine architecture. So in C99, you can use int8_t instead of signed char to guarantee a signed type that holds exactly 8 bits, or uint64_t instead of unsigned long long to get a 64-bit unsigned integer type. The full set of types typically defined are int8_t, int16_t, int32_t, and int64_t for signed integers, and the same names starting with uint for unsigned integers. There are also types for the smallest integers with at least some minimum number of bits (e.g., int_least16_t is a signed type with at least 16 bits, chosen to minimize space). Other types give the fastest integers with at least the given number of bits (e.g., int_fast16_t is a signed type with at least 16 bits, chosen to minimize time).

These are all defined using typedef; the main advantage of using stdint.h over defining them yourself is that if somebody ports your code to a new architecture, stdint.h should take care of choosing the right types automatically. The disadvantage is that, like many C99 features, stdint.h is not universally available on all C compilers.

If you need to print types defined in stdint.h, the larger inttypes.h header defines macros that give the corresponding format strings for printf.
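The following sketch shows the inttypes.h format macros in use, assuming a C99 compiler. The PRI macros expand to string literals (the exact text, such as "d" or "llu", is platform-specific), and C concatenates adjacent string literals into one format string. The function name format_fixed_width is hypothetical.

```c
#include <stdint.h>
#include <inttypes.h>   /* PRId32, PRIu64, ... */
#include <stdio.h>

/*
 * Formats a 32-bit signed and a 64-bit unsigned value into buf, separated
 * by a space. Returns the number of characters snprintf wanted to write.
 */
int format_fixed_width(char *buf, size_t size, int32_t a, uint64_t b)
{
    return snprintf(buf, size, "%" PRId32 " %" PRIu64, a, b);
}
```

With a = -5 and b = UINT64_MAX, buf receives "-5 18446744073709551615".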

7. Integer constants

Constant integer values in C can be written in any of four different ways:

In the usual decimal notation, e.g. 0, 1, -127, 9919291, 97.

In octal or base 8, when the leading digit is 0, e.g. 01 for 1, 010 for 8, 0777 for 511, 0141 for 97.

In hexadecimal or base 16, when prefixed with 0x. The letters a through f are used for the digits 10 through 15. For example, 0x61 is another way to write 97.

Using a character constant, which is a single ASCII character or an escape sequence inside single quotes. The value is the ASCII value of the character: 'a' is 97.3 Unlike languages with separate character types, C characters are identical to integers; you can (but shouldn't) calculate $97^2$ by writing 'a'*'a'. You can also store a character constant in any integer variable.

Except for character constants, you can insist that an integer constant is unsigned or long by putting a u or l after it. So 1ul is an unsigned long version of 1. By default integer constants are (signed) ints. For long long constants, use ll, e.g., the unsigned long long constant 0xdeadbeef01234567ull. It is also permitted to write the l as L, which can be less confusing if the l looks too much like a 1.
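The sketch below records the four spellings of 97 and two suffixed constants. It assumes an ASCII character set for 'a', and the array and variable names are purely illustrative.

```c
/* 97 written four ways: decimal, octal, hex, and an (ASCII) character. */
const int ninety_seven[] = { 97, 0141, 0x61, 'a' };

/* Suffixes change the type of a constant, not its value. */
const unsigned long one_ul = 1ul;
const unsigned long long big_ull = 0xdeadbeef01234567ull;
```

Every element of ninety_seven compares equal to 97, and sizeof(1ul) equals sizeof(unsigned long).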

8. Integer operators

8.1. Arithmetic operators

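The usual + (addition), - (negation or subtraction), and * (multiplication) operators work on integers pretty much the way you'd expect. The only caveat is that a result outside the range of whatever variable you are storing it in is truncated instead of causing an error. For unsigned types this is guaranteed: the result wraps around modulo $2^n$. For signed types, overflow in arithmetic is technically undefined behavior in standard C, although on typical machines the result simply wraps around as well.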

This can be a source of subtle bugs if you aren't careful. The usual giveaway is that values you thought should be large positive integers come back as random-looking negative integers.
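The sketch below shows well-defined unsigned wraparound. It deliberately avoids signed overflow, since that is undefined behavior and could be "optimized" in surprising ways. It assumes 8-bit chars, and the function names are hypothetical.

```c
#include <limits.h>

/* Unsigned arithmetic wraps modulo 2^n; the cast makes the truncation to
 * an 8-bit unsigned char explicit (assuming 8-bit chars). */
unsigned char add_bytes(unsigned char a, unsigned char b)
{
    return (unsigned char) (a + b);
}

/* UINT_MAX + 1 wraps around to 0; this is well defined for unsigned int. */
unsigned int uint_max_plus_one(void)
{
    return UINT_MAX + 1u;
}
```

For example, add_bytes(200, 100) yields 44, since 300 mod 256 = 44.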

Division (/) of two integers also truncates: 2/3 is 0, 5/3 is 1, etc. For positive integers it will always round down.

Prior to C99, if either the numerator or denominator was negative, the result was implementation-defined and depended on what your processor does. In practice this meant you should never use / if one or both arguments might be negative. The C99 standard specified that integer division always removes the fractional part, effectively rounding toward 0; so (-3)/2 is -1, 3/-2 is -1, and (-3)/-2 is 1.

There is also a remainder operator %, with e.g. 2%3 = 2, 5%3 = 2, 27%2 = 1, etc. The sign of the divisor is ignored, so 2%-3 is also 2. The sign of the dividend carries over to the remainder: (-3)%2 and (-3)%(-2) are both -1. The reason for this rule is that it guarantees that y == x*(y/x) + y%x is always true (for nonzero x).
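The sketch below checks the C99 rules numerically. The helper name is invented for illustration, and it requires a C99 compiler so that negative operands have the specified behavior.

```c
/*
 * C99 integer division truncates toward zero, and % takes the sign of the
 * dividend. Returns 1 if y == x*(y/x) + y%x holds; x must be nonzero.
 */
int division_identity_holds(int y, int x)
{
    int q = y / x;      /* quotient, rounded toward 0 */
    int r = y % x;      /* remainder: 0 or the same sign as y */
    return y == x * q + r;
}
```

For example, with y = -3 and x = 2 the quotient is -1 and the remainder -1, and 2*(-1) + (-1) = -3.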

8.2. Bitwise operators

In addition to the arithmetic operators, integer types support bitwise logical operators that apply some Boolean operation to all the bits of their arguments in parallel. What this means is that the i-th bit of the output is equal to some operation applied to the i-th bit(s) of the input(s). The bitwise logical operators are ~ (bitwise negation: used with one argument as in ~0 for the all-1's binary value), & (bitwise AND), | (bitwise OR), and ^ (bitwise XOR, i.e. sum mod 2). These are mostly used for manipulating individual bits or small groups of bits inside larger words, as in the expression x & 0x0f, which keeps only the bottom four bits of x and clears all the others.

Examples:

| x | y | expression | value |
|---|---|---|---|
| 0011 | 0101 | x&y | 0001 |
| 0011 | 0101 | x\|y | 0111 |
| 0011 | 0101 | x^y | 0110 |
| 0011 | 0101 | ~x | 1100 |
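The table can be reproduced with the following sketch, which writes the 4-bit values in hex (0011 is 0x3, 0101 is 0x5). The helper names are invented for illustration. Because ~ flips every bit of the whole word, the negation example masks the result back down to 4 bits.

```c
/* Bitwise operators applied to all bits of their arguments in parallel. */
unsigned bit_and(unsigned x, unsigned y) { return x & y; }
unsigned bit_or(unsigned x, unsigned y)  { return x | y; }
unsigned bit_xor(unsigned x, unsigned y) { return x ^ y; }

/* ~x flips all bits of an unsigned int; & 0xf keeps only the low 4 bits,
 * so ~0011 shows up as 1100 as in the table. */
unsigned bit_not4(unsigned x) { return ~x & 0xfu; }

/* x & 0x0f keeps the bottom four bits and clears the rest. */
unsigned low_nibble(unsigned x) { return x & 0x0fu; }
```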

The shift operators << and >> shift the bit sequence left or right. The expression x << y produces the value $x \cdot 2^y$ (ignoring overflow); this is equivalent to shifting every bit in x y positions to the left and filling in y zeros for the missing positions. In the other direction, x >> y produces the value $\lfloor x \cdot 2^{-y} \rfloor$, by shifting x y positions to the right. The behavior of the right shift operator depends on whether x is unsigned or signed. For unsigned values, it always shifts in zeros from the left end. For negative signed values, the C standard leaves the result implementation-defined, but most compilers shift in additional copies of the leftmost bit (the sign bit). This makes x >> y have the same sign as x if x is signed.

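If y is negative, or is at least the number of bits in x's (promoted) type, the result is undefined; in particular, x << -2 is not a portable way to write x >> 2. Left-shifting a negative signed value is also undefined in C99.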

Examples (unsigned char x, with results truncated to 8 bits as when stored back into x):

| x | y | x << y | x >> y |
|---|---|---|---|
| 00000001 | 1 | 00000010 | 00000000 |
| 11111111 | 3 | 11111000 | 00011111 |
| 10111001 | 2 | 11100100 | 00101110 |

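Examples (signed char x, on a typical machine with an arithmetic right shift; the left shifts of the negative values are formally undefined, and the results shown are what such machines produce):

| x | y | x << y | x >> y |
|---|---|---|---|
| 00000001 | 1 | 00000010 | 00000000 |
| 11111111 | 3 | 11111000 | 11111111 |
| 10111001 | 2 | 11100100 | 11101110 |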
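The sketch below reproduces the 8-bit tables, writing the bit patterns in hex (10111001 is 0xb9). It assumes 8-bit chars and 0 <= y < the width of int. The signed version relies on the common arithmetic right shift (what gcc and clang do on typical hardware), which the standard leaves implementation-defined. The function names are hypothetical.

```c
/* 8-bit shifts on unsigned char; the casts truncate results to 8 bits. */
unsigned char shl_u8(unsigned char x, int y) { return (unsigned char) (x << y); }
unsigned char shr_u8(unsigned char x, int y) { return (unsigned char) (x >> y); }

/* Right shift of a negative signed value is implementation-defined; typical
 * compilers copy the sign bit, so -71 (10111001) >> 2 gives -18 (11101110). */
signed char sar_s8(signed char x, int y) { return (signed char) (x >> y); }
```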

Shift operators are often used with bitwise logical operators to set or extract individual bits in an integer value. The trick is that (1 << i) contains a 1 in the i-th least significant bit (counting from 0) and zeros everywhere else. So x & (1<<i) is nonzero if and only if x has a 1 in the i-th place. This can be used to print out an integer in binary format (which standard printf won't do).

(See test_print_binary.c for a program that uses this.)

In the other direction, we can set the i-th bit of x to 1 by doing x | (1 << i) or to 0 by doing x & ~(1 << i). See C/BitExtraction for applications of this to build arbitrarily-large bit vectors.
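The program test_print_binary.c is not reproduced here. The following is an independent sketch of the same idea, and all the function names in it are hypothetical. It uses 1u rather than 1 so that shifting into the top bit stays well defined, and it builds a string that the caller can then print with puts or printf.

```c
/* Test, set, and clear bit i of x (bit 0 is the least significant). */
int test_bit(unsigned x, int i)       { return (x & (1u << i)) != 0; }
unsigned set_bit(unsigned x, int i)   { return x | (1u << i); }
unsigned clear_bit(unsigned x, int i) { return x & ~(1u << i); }

/*
 * Writes the low width bits of x into buf as '0'/'1' characters, most
 * significant first, followed by a terminating '\0'. buf must hold at
 * least width + 1 chars, and width must not exceed the bits in an unsigned.
 */
void to_binary(unsigned x, int width, char *buf)
{
    int i;

    for(i = 0; i < width; i++) {
        buf[i] = test_bit(x, width - 1 - i) ? '1' : '0';
    }
    buf[width] = '\0';
}
```

For example, to_binary(0xb9, 8, buf) stores "10111001" in buf.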

8.3. Logical operators

To add to the confusion, there are also three logical operators that work on the truth-values of integers, where 0 is defined to be false and anything else is defined to be true. These are && (logical AND), || (logical OR), and ! (logical NOT). The result of any of these operators is always 0 or 1 (so !!x, for example, is 0 if x is 0 and 1 if x is anything else). The && and || operators evaluate their arguments left-to-right and ignore the second argument if the first determines the answer. This is one of the few places in C where evaluation order is specified (the others are ?: and the comma operator). So the statement 0 && execute_programmer(); is in a very weak sense perfectly safe code to run.

Watch out for confusing & with &&. The expression 1 & 2 evaluates to 0, but 1 && 2 evaluates to 1. The statement 0 & execute_programmer(); is also unlikely to do what you want.
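Short-circuiting can be observed by giving the second operand a visible side effect. In this sketch, the counter and function names are hypothetical stand-ins for something like execute_programmer().

```c
/* A hypothetical operand with a visible side effect: it counts its calls. */
static int calls = 0;

static int count_call(void)
{
    calls++;
    return 1;
}

/* && stops after a false left operand, so count_call() never runs. */
int calls_after_logical_and(void)
{
    calls = 0;
    (void) (0 && count_call());
    return calls;
}

/* & always evaluates both operands. */
int calls_after_bitwise_and(void)
{
    calls = 0;
    (void) (0 & count_call());
    return calls;
}
```

Here calls_after_logical_and() returns 0 and calls_after_bitwise_and() returns 1.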

Yet another logical operator is the ternary operator ?:, where x ? y : z equals the value of y if x is nonzero and z if x is zero. Like && and ||, it only evaluates the arguments it needs to:

```c
fileExists(badFile) ? deleteFile(badFile) : createFile(badFile);
```

Most uses of ?: are better done using an if-then-else statement (C/Statements).

8.4. Relational operators

Logical operators usually operate on the results of relational operators or comparisons: these are == (equality), != (inequality), < (less than), > (greater than), <= (less than or equal to) and >= (greater than or equal to). So, for example,

```c
if(size >= MIN_SIZE && size <= MAX_SIZE) {
    puts("just right");
}
```

tests if size is in the (inclusive) range [MIN_SIZE..MAX_SIZE].

Beware of confusing == with =. The test if(x = 5) is perfectly legal C, and will set x to 5 rather than testing if it's equal to 5. Because 5 happens to be nonzero, the body of the if statement will always be executed. This error is so common and so dangerous that gcc will warn you about any tests that look like this if you use the -Wall option. Some programmers will go so far as to write the test as 5 == x. That way, if their finger slips, they will get a compile-time error on 5 = x even without special compiler support.

9. Input and output

To input or output integer values, you will need to convert them from or to strings. Converting from a string is easy using the atoi, atol, or (in C99) atoll functions declared in stdlib.h; these take a string as an argument and return an int, long, or long long, respectively.4

Output is usually done using printf (or sprintf if you want to write to a string without producing output). Use the %d format specifier for ints, shorts, and chars that you want the numeric value of, %ld for longs, and %lld for long longs.

A contrived program that uses all of these features is given below:

```c
#include <stdio.h>
#include <stdlib.h>

/* This program can be used to show how atoi etc. handle overflow. */
/* For example, try "overflow 1000000000000". */
int
main(int argc, char **argv)
{
    char c;
    int i;
    long l;
    long long ll;

    if(argc != 2) {
        fprintf(stderr, "Usage: %s n\n", argv[0]);
        return 1;
    }

    c = atoi(argv[1]);
    i = atoi(argv[1]);
    l = atol(argv[1]);
    ll = atoll(argv[1]);

    printf("char: %d int: %d long: %ld long long: %lld\n", c, i, l, ll);

    return 0;
}
```
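The atoi family gives no way to detect overflow or junk input. The standard library also provides strtol (and strtoll in C99), which reports where parsing stopped and sets errno to ERANGE when the value is out of range. Below is a minimal sketch of a checked wrapper. The name parse_long and its return convention are our own, and it uses a pointer parameter (see C/Pointers).

```c
#include <errno.h>
#include <stdlib.h>

/*
 * Parses the whole string s as a base-10 long. On success stores the value
 * in *result and returns 1; returns 0 if s is empty, has trailing junk,
 * or is out of range for a long.
 */
int parse_long(const char *s, long *result)
{
    char *end;      /* strtol points this at the first unparsed char */
    long value;

    errno = 0;
    value = strtol(s, &end, 10);

    if(end == s || *end != '\0' || errno == ERANGE) {
        return 0;
    }

    *result = value;
    return 1;
}
```

Unlike atoi("12abc"), which quietly returns 12, parse_long rejects that input.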

10. Alignment

Modern CPU architectures typically enforce alignment restrictions on multi-byte values, which means that the address of an int or long typically has to be a multiple of 4. This is an effect of the memory being organized as groups of 32 bits that are written in parallel, rather than as individual 8-bit bytes. Such restrictions are not obvious when working with integer-valued variables directly, but will come up when we talk about pointers in C/Pointers.

11. C/Statements


The bodies of C functions (including the main function) are made up of statements. These can either be simple statements that do not contain other statements, or compound statements that have other statements inside them. Control structures are compound statements like if/then/else, while, for, and do..while that control how or whether their component statements are executed.

12. Simple statements

The simplest kind of statement in C is an expression (followed by a semicolon, the terminator for all simple statements). Its value is computed and discarded.

Most statements in a typical C program are simple statements of this form.
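The following sketch shows a function body made up almost entirely of expression statements. The function name is invented for illustration. Each marked line is evaluated only for its side effects, and its value is thrown away.

```c
#include <stdio.h>

int expression_statements(void)
{
    int x;

    x = 2;          /* an assignment statement */
    x = x + 3;      /* another assignment statement */
    puts("hi");     /* a function call; the return value of puts is discarded */
    x++;            /* an increment used only for its side effect */

    return x;       /* a jump statement, not an expression statement */
}
```

Calling expression_statements() prints hi and returns 6.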

Other examples of simple statements are the jump statements return, break, continue, and goto. A return statement specifies the return value for a function (if there is one), and when executed it causes the function to exit immediately. The break and continue statements jump immediately to the end of a loop (or switch; see below) or the next iteration of a loop; we'll talk about these more when we talk about loops. The goto statement jumps to another location in the same function, and exists for the rare occasions when it is needed. Using it in most circumstances is a sin.

13. Compound statements

Compound statements come in two varieties: conditionals and loops.

13.1. Conditionals

These are compound statements that test some condition and execute one or another block depending on the outcome of the condition. The simplest is the if statement, written as if(expression) followed by a body in braces.

The body of the if statement is executed only if the expression in parentheses at the top evaluates to true (which in C means any value that is not 0).

The braces are not strictly required, and are used only to group one or more statements into a single statement. If there is only one statement in the body, the braces can be omitted:

```c
if(programmerIsLazy) omitBraces();
```

This style is recommended only for very simple bodies. Omitting the braces makes it harder to add more statements later without errors.

For example, suppose someone later adds a hideInBunker() call on its own line after a brace-less if, indented to match the body. Despite the misleading indentation, that statement is not part of the if statement, and it will run every time. This sort of thing is why I almost always put in braces in an if.

An if statement may have an else clause, whose body is executed if the test is false (i.e. equal to 0).

A common idiom is to have a chain of if and else if branches that test several conditions in turn.
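The following sketch shows a chain of this kind. The function sign_word is a made-up example. The tests are tried top to bottom, and the final else catches everything the earlier tests missed.

```c
/* Describes the sign of n using an if / else if / else chain. */
const char *sign_word(int n)
{
    if(n < 0) {
        return "negative";
    } else if(n == 0) {
        return "zero";
    } else {
        return "positive";
    }
}
```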

This can be inefficient if there are a lot of cases, since the tests are applied sequentially. For tests of the form <expression> == <small constant>, the switch statement may provide a faster alternative.

For example, a switch on numberOfCows might print the string "cow" if there is one cow, "cowen" if there are two cowen, and "cows" if there are any other number of cows. The switch statement evaluates its argument and jumps to the matching case label, or to the default label if none of the cases match. Case labels must be constant integer expressions.
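Here is a sketch of such a switch, written for illustration rather than taken from the original notes. It returns the word instead of printing it so that the caller can decide what to do with it. The function name cow_word is hypothetical.

```c
/* Picks the word to use for numberOfCows cows. */
const char *cow_word(int numberOfCows)
{
    const char *word;   /* the word chosen by the switch */

    switch(numberOfCows) {
    case 1:
        word = "cow";
        break;          /* without this, control would fall into case 2 */
    case 2:
        word = "cowen";
        break;
    default:
        word = "cows";
        break;          /* customary even on the last case */
    }

    return word;
}
```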

The break statements inside the block jump to the end of the block. Without them, a switch like the one described above, executed with numberOfCows equal to 1, would run the code for all three cases. Leaving out break can be useful in some circumstances where the same code should be used for more than one case, or when a case "falls through" to the next.

Note that it is customary to include a break on the last case even though it has no effect; this avoids problems later if a new case is added. It is also customary to include a default case even if the other cases supposedly exhaust all the possible values, as a check against bad or unanticipated inputs.
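The sketch below illustrates both uses of omitting break. Both functions are hypothetical examples. In is_vowel, several case labels share one body. In stages_to_run, each case deliberately falls through to the next, marked with a comment (which gcc's -Wimplicit-fallthrough also recognizes).

```c
/* Several case labels can share one body: 1 for a lowercase vowel, else 0. */
int is_vowel(int c)
{
    switch(c) {
    case 'a':
    case 'e':
    case 'i':
    case 'o':
    case 'u':
        return 1;
    default:
        return 0;
    }
}

/*
 * Deliberate fall-through for a hypothetical setup process with stages
 * 0, 1, 2: starting at an earlier stage also runs every later stage.
 * Returns the number of stages run, or -1 for an unknown stage.
 */
int stages_to_run(int start)
{
    int count = 0;

    switch(start) {
    case 0:
        count++;
        /* fall through */
    case 1:
        count++;
        /* fall through */
    case 2:
        count++;
        break;
    default:
        count = -1;
        break;
    }

    return count;
}
```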

Though switch statements are better than deeply nested if/else-if constructions, it is often even better to organize the different cases as data rather than code. We'll see examples of this when we talk about function pointers (C/FunctionPointers).

Nothing in the C standards prevents the case labels from being buried inside other compound statements. One rather hideous application of this fact is Duff's device.

13.2. Loops

There are three kinds of loops in C.

13.2.1. The while loop

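A while loop tests if a condition is true, and if so, executes its body. It then tests whether the condition is still true, and keeps executing the body as long as it is. For example, a program that deletes every occurrence of the letter e from its input can be built around the loop while((c = getchar()) != EOF), copying every character other than e to its output.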

Note that the expression inside the while argument both assigns the return value of getchar to c and tests to see if it is equal to EOF (which is returned when no more input characters are available). This is a very common idiom in C programs. Note also that even though c holds a single character, it is declared as an int. The reason is that EOF (a constant defined in stdio.h) is outside the normal character range, and if you assign it to a variable of type char it will be quietly truncated into something else. Because C doesn't provide any sort of exception mechanism for signalling unusual outcomes of function calls, designers of library functions often have to resort to extending the output of a function to include an extra value or two to signal failure; we'll see this a lot when the null pointer shows up in C/Pointers.
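The filter described above can be sketched as a function that takes its input and output streams as parameters. Calling delete_e(stdin, stdout) from main gives the standalone program. The function name and its return value (the number of characters dropped) are our own additions. The loop uses getc on an arbitrary stream, which behaves like getchar on stdin.

```c
#include <stdio.h>

/*
 * Copies in to out, dropping every lowercase 'e'. Returns the number of
 * characters dropped. c is an int, not a char, so that EOF stays distinct
 * from every real character.
 */
int delete_e(FILE *in, FILE *out)
{
    int c;              /* character read, or EOF */
    int deleted = 0;    /* number of e's skipped */

    while((c = getc(in)) != EOF) {
        if(c == 'e') {
            deleted++;
        } else {
            putc(c, out);
        }
    }

    return deleted;
}
```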

13.2.2. The do..while loop

The do..while statement is like the while statement except the test is done at the end of the loop instead of the beginning. This means that the body of the loop is always executed at least once.

Here's a loop that does a random walk until it gets back to 0 (if ever). If we changed the do..while loop to a while loop, it would never take the first step, because pos starts at 0.

#include <stdio.h>
#include <stdlib.h>
#include <time.h>

int
main(int argc, char **argv)
{
    int pos = 0;    /* position of random walk */

    /* srandom and random are POSIX functions, not ISO C; */
    /* on systems without them, srand and rand can be used instead */
    srandom(time(0));    /* initialize random number generator */

    do {
        pos += random() & 0x1 ? +1 : -1;
        printf("%d\n", pos);
    } while(pos != 0);

    return 0;
}

The do..while loop is used much less often in practice than the while loop. Note that it is always possible to convert a do..while loop to a while loop by making an extra copy of the body in front of the loop.
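
To see the conversion concretely, here are two hypothetical functions (names invented for this sketch) that count how many times a loop body runs. The first uses do..while; the second copies the body once in front of an ordinary while loop. The two agree for every starting value, including those where the test fails immediately.

```c
/* do..while version: the body always runs at least once */
int
countdownDo(int n)
{
    int iterations = 0;

    do {
        iterations++;
        n--;
    } while(n > 0);

    return iterations;
}

/* equivalent while version: one copy of the body in front of the loop */
int
countdownWhile(int n)
{
    int iterations = 0;

    iterations++;   /* copied body, executed unconditionally */
    n--;

    while(n > 0) {
        iterations++;
        n--;
    }

    return iterations;
}
```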

13.2.3. The for loop

The for loop is a form of SyntacticSugar that is used when a loop iterates over a sequence of values stored in some variable (or variables). Its argument consists of three expressions: the first initializes the variable and is called once when the statement is first reached. The second is the test to see if the body of the loop should be executed; it has the same function as the test in a while loop. The third sets the variable to its next value. Some examples:

/* count from 0 to 9 */
for(i = 0; i < 10; i++) {
    printf("%d\n", i);
}

/* and back from 10 to 0 */
for(i = 10; i >= 0; i--) {
    printf("%d\n", i);
}

/* this loop uses some functions to move around */
for(c = firstCustomer(); c != END_OF_CUSTOMERS; c = customerAfter(c)) {
    helpCustomer(c);
}

/* this loop prints powers of 2 that are less than n */
for(i = 1; i < n; i *= 2) {
    printf("%d\n", i);
}

/* this loop does the same thing with two variables by using the comma operator */
for(i = 0, power = 1; power < n; i++, power *= 2) {
    printf("2^%d = %d\n", i, power);
}

/* Here are some nested loops that print a times table */
for(i = 0; i < n; i++) {
    for(j = 0; j < n; j++) {
        printf("%d*%d=%d ", i, j, i*j);
    }
    putchar('\n');
}

A for loop can always be rewritten as a while loop.
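
The rewrite follows a fixed pattern: for(init; test; step) body becomes init; while(test) { body step; }. The sketch below shows it on a hypothetical pair of functions that sum 0 through n-1. One caveat when doing this by hand: a continue inside a for loop still runs the step expression, while in the naive while version it would skip the step at the end of the body, so loops that use continue need extra care.

```c
/* sum of 0..n-1 using a for loop */
int
sumFor(int n)
{
    int i;
    int sum = 0;

    for(i = 0; i < n; i++) {
        sum += i;
    }
    return sum;
}

/* the same loop rewritten as a while loop */
int
sumWhile(int n)
{
    int i;
    int sum = 0;

    i = 0;              /* init */
    while(i < n) {      /* test */
        sum += i;       /* body */
        i++;            /* step, moved to the end of the body */
    }
    return sum;
}
```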

13.2.4. Loops with break, continue, and goto

The break statement immediately exits the innermost enclosing loop or switch statement.

The continue statement skips to the next iteration. Here is a program with a loop that iterates through all the integers from -10 through 10, skipping 0:
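
A minimal sketch of such a loop, written as a hypothetical function printNonzero that prints to a given stream (call it with stdout to get the program's behavior) and returns how many numbers it printed:

```c
#include <stdio.h>

/* Print the integers from -10 through 10, skipping 0, to out.
 * Returns how many numbers were printed. */
int
printNonzero(FILE *out)
{
    int i;
    int count = 0;

    for(i = -10; i <= 10; i++) {
        if(i == 0) {
            continue;   /* skip the rest of this iteration; i++ still runs */
        }
        fprintf(out, "%d\n", i);
        count++;
    }

    return count;
}
```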

Occasionally, one would like to break out of more than one nested loop. The way to do this is with a goto statement.

The target for the goto is a label, which is just an identifier followed by a colon and a statement (the empty statement ; is ok).
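
Here is a sketch of that use, assuming a hypothetical search through a fixed-size two-dimensional array: as soon as the target turns up, a single goto leaves both loops, where a break would only leave the inner one. The name findInGrid and the ROWS/COLS sizes are invented for this example.

```c
#define ROWS 3
#define COLS 4

/* Return the position i*COLS+j of the first occurrence of target in grid,
 * or -1 if it does not appear. */
int
findInGrid(int grid[ROWS][COLS], int target)
{
    int i;
    int j;

    for(i = 0; i < ROWS; i++) {
        for(j = 0; j < COLS; j++) {
            if(grid[i][j] == target) {
                goto found;     /* exits both loops at once */
            }
        }
    }

    /* fell out of both loops: target not present */
    return -1;

found:
    return i * COLS + j;
}
```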

The goto statement can be used to jump anywhere within the same function body, but breaking out of nested loops is widely considered to be its only genuinely acceptable use in normal code.

13.3. Choosing where to put a loop exit

Choosing where to put a loop exit is usually pretty obvious: you want it after any code that you want to execute at least once, and before any code that you want to execute only if the termination test fails.

If you know in advance what values you are going to be iterating over, you will most likely be using a for loop:

Most of the rest of the time, you will want a while loop:

The do..while loop comes up mostly when you want to try something, then try again if it failed:

Finally, leaving a loop in the middle using break can be handy if you have something extra to do before trying again:

(Note the empty for loop header means to loop forever; while(1) also works.)
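
As a sketch of the last pattern, here is a hypothetical function readPositive that keeps reading integers until it sees a positive one. The exit sits in the middle of the loop, and the "something extra" done before trying again is a warning message.

```c
#include <stdio.h>

/* Read integers from in until a positive one shows up.
 * Returns that integer, or -1 if input runs out (or is not a number) first. */
int
readPositive(FILE *in)
{
    int n;

    for(;;) {                           /* empty header: loop forever */
        if(fscanf(in, "%d", &n) != 1) {
            return -1;                  /* nothing left to try */
        }
        if(n > 0) {
            break;                      /* exit in the middle of the loop */
        }
        /* extra work done before trying again */
        fprintf(stderr, "ignoring non-positive value %d\n", n);
    }

    return n;
}
```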

14. C/AssemblyLanguage

C is generally compiled to assembly language first, and then an assembler compiles the assembly language to actual machine code. Typically the intermediate assembly language code is deleted.

If you are very curious about what the compiler does to your code, you can run gcc with the -S option to tell it to stop after creating the assembly file; for example, the command gcc -S program.c will create a file program.s containing the compiled code. What this code will look like depends both on what your C code looks like and on what other options you give the C compiler. You can learn how to read (or write) assembly for the Intel i386 architecture from an i386 assembly language reference (but note that gas, the GNU assembler used by gcc, uses the AT&T variant of i386 syntax, which among other differences puts the source operand before the destination), or you can just jump in and guess what is going on by trying to expand the keywords (hint: movl stands for "move long," addl for "add long," etc., numbers preceded by dollar signs are constants, and things like %ebx are the names of registers, high-speed memory locations built into the CPU).

For example, here's a short C program that implements a simple loop in two different ways, the first using the standard for loop construct and the second building a for loop by hand using goto (don't do this).

#include <stdio.h>

int
main(int argc, char **argv)
{
    int i;

    /* normal loop */
    for(i = 0; i < 10; i++) {
        printf("%d\n", i);
    }

    /* non-standard implementation using if and goto */
    i = 0;
start:
    if(i < 10) {
        printf("%d\n", i);
        i++;
        goto start;
    }

    return 0;
}

and here is the output of the compiler using gcc -S loops.c:

    .file "loops.c"
    .section .rodata
.LC0:
    .string "%d\n"
    .text
    .globl main
    .type main, @function
main:
    pushl %ebp
    movl %esp, %ebp
    subl $24, %esp
    andl $-16, %esp
    movl $0, %eax
    subl %eax, %esp
    movl $0, -4(%ebp)
.L2:
    cmpl $9, -4(%ebp)
    jle .L5
    jmp .L3
.L5:
    movl -4(%ebp), %eax
    movl %eax, 4(%esp)
    movl $.LC0, (%esp)
    call printf
    leal -4(%ebp), %eax
    incl (%eax)
    jmp .L2
.L3:
    movl $0, -4(%ebp)
.L6:
    cmpl $9, -4(%ebp)
    jg .L7
    movl -4(%ebp), %eax
    movl %eax, 4(%esp)
    movl $.LC0, (%esp)
    call printf
    leal -4(%ebp), %eax
    incl (%eax)
    jmp .L6
.L7:
    movl $0, %eax
    leave
    ret
    .size main, .-main
    .section .note.GNU-stack,"",@progbits
    .ident "GCC: (GNU) 3.3.4 (Debian)"

Note that even though the two loops are doing the same thing, the structure is different. The first uses jle (jump if less than or equal to) to jump over the jmp that skips the body of the loop if it shouldn't be executed, while the second uses jg (jump if greater than) to skip it directly, which is closer to what the C code says to do. This is not unusual; for built-in control structures the compiler will often build odd-looking implementations that still work, for subtle and mysterious reasons of its own.

We can make them even more different by turning on the optimizer, e.g. with gcc -S -O3 -funroll-loops loops.c:

    .file "loops.c"
    .section .rodata.str1.1,"aMS",@progbits,1
.LC0:
    .string "%d\n"
    .text
    .p2align 4,,15
    .globl main
    .type main, @function
main:
    pushl %ebp
    xorl %ecx, %ecx
    movl %esp, %ebp
    pushl %ebx
    subl $20, %esp
    movl $2, %ebx
    andl $-16, %esp
    movl %ecx, 4(%esp)
    movl $.LC0, (%esp)
    call printf
    movl $.LC0, (%esp)
    movl $1, %edx
    movl %edx, 4(%esp)
    call printf
    movl %ebx, 4(%esp)
    movl $5, %ebx
    movl $.LC0, (%esp)
    call printf
    movl $.LC0, (%esp)
    movl $3, %ecx
    movl %ecx, 4(%esp)
    call printf
    movl $.LC0, (%esp)
    movl $4, %edx
    movl %edx, 4(%esp)
    call printf
    movl %ebx, 4(%esp)
    xorl %ebx, %ebx
    movl $.LC0, (%esp)
    call printf
    movl $.LC0, (%esp)
    movl $6, %ecx
    movl %ecx, 4(%esp)
    call printf
    movl $.LC0, (%esp)
    movl $7, %edx
    movl %edx, 4(%esp)
    call printf
    movl $.LC0, (%esp)
    movl $8, %eax
    movl %eax, 4(%esp)
    call printf
    movl $.LC0, (%esp)
    movl $9, %edx
    movl %edx, 4(%esp)
    .p2align 4,,15
.L31:
    call printf
.L7:
    cmpl $9, %ebx
    jg .L8
    movl %ebx, 4(%esp)
    incl %ebx
    movl $.LC0, (%esp)
    jmp .L31
.L8:
    movl -4(%ebp), %ebx
    xorl %eax, %eax
    leave
    ret
    .size main, .-main
    .section .note.GNU-stack,"",@progbits
    .ident "GCC: (GNU) 3.3.4 (Debian)"

Now the first loop is gone, replaced by ten separate calls to printf. Some of the arguments are loaded up in odd places; for example, 2 and 5 are stored in their registers well before they are used. Again, the compiler moves in mysterious ways, but guarantees that the resulting code will do what you asked. The second loop is left pretty much the same as it was, since the C compiler doesn't have enough information to recognize it as a loop.

The moral of the story: if you write your code using standard idioms, it's more likely that the C compiler will understand what you want and be able to speed it up for you. The odds are that using a non-standard idiom will cost you more in time by confusing the compiler than it will gain you in slight code improvements.

15. C/Functions

A function, procedure, or subroutine encapsulates some complex computation as a single operation. Typically, when we call a function, we pass as arguments all the information this function needs, and any effect it has will be reflected in either its return value or (in some cases) in changes to values pointed to by the arguments. Inside the function, the arguments are copied into local variables, which can be used just like any other local variable---they can even be assigned to without affecting the original argument.

16. Function definitions

A typical function definition looks like this:
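
As a sketch, here is a small definition using the name distSquared that appears later in these notes; the parameters and the body (squared distance between two points separated by dx and dy) are an assumption made for illustration, not the original listing.

```c
/* Returns the square of the distance between two points
 * separated by dx in the x direction and dy in the y direction. */
int
distSquared(int dx, int dy)
{
    return dx*dx + dy*dy;
}
```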

The part outside the braces is called the function declaration; the braces and their contents are the function body.

Like most complex declarations in C, once you delete the type names the declaration looks like how the function is used: the name of the function comes before the parentheses and the arguments inside. The ints scattered about specify the type of the return value of the function (the int before the function name) and of the parameters (the ints inside the parentheses); these are used by the compiler to determine how to pass values in and out of the function and (usually for more complex types, since numerical types will often convert automatically) to detect type mismatches.

If you want to define a function that doesn't return anything, declare its return type as void. You should also declare a parameter list of void if the function takes no arguments.
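
For example, a minimal sketch of the helloWorld function discussed below (the printed message is an assumption):

```c
#include <stdio.h>

/* Prints a greeting; returns nothing and takes no arguments. */
void
helloWorld(void)
{
    puts("hi");
}
```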

It is not strictly speaking an error to omit the second void here. Putting void in for the parameters tells the compiler to enforce that no arguments are passed in. If we had instead declared helloWorld with an empty parameter list, as void helloWorld(), it would be possible to call it as

helloWorld("this is a bogus argument");

without causing an error. The reason is that a function declaration with no arguments means that the function can take an unspecified number of arguments, and it's up to the user to make sure they pass in the right ones. There are good historical reasons for what may seem like obvious lack of sense in the design of the language here, and fixing this bug would break most C code written before 1989. But you shouldn't ever write a function declaration with an empty argument list, since you want the compiler to know when something goes wrong.

17. Calling a function

A function call consists of the function followed by its arguments (if any) inside parentheses, separated by commas. For a function with no arguments, call it with nothing between the parentheses. A function call that returns a value can be used in an expression just like a variable. A call to a void function can only be used by itself, as a statement:
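
A minimal sketch of both kinds of calls, using hypothetical helper functions square (returns a value) and report (returns nothing):

```c
#include <stdio.h>

/* hypothetical helper that returns a value */
int
square(int x)
{
    return x * x;
}

/* hypothetical helper that returns nothing */
void
report(int x)
{
    printf("result: %d\n", x);
}

/* hypothetical caller showing both kinds of calls */
int
sumOfSquares(int a, int b)
{
    int total;

    total = square(a) + square(b);  /* value-returning calls inside an expression */
    report(total);                  /* void call used as a statement by itself */

    /* total = report(total);  would be rejected: report returns no value */

    return total;
}
```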

18. The return statement

To return a value from a function, write a return statement, e.g.

return 172;

The argument to return can be any expression. Unlike the expression in, say, an if statement, you do not need to wrap it in parentheses. If a function is declared void, you can do a return with no expression, or just let control reach the end of the function.

Executing a return statement immediately terminates the function. This can be used like break to get out of loops early.

19. Function declarations and modules

By default, functions have global scope: they can be used anywhere in your program, even in other files. If a file doesn't contain a declaration for a function someFunc before it is used, the compiler will assume that it is declared like int someFunc() (i.e., return type int and unknown arguments). This can produce infuriating complaints later when the compiler hits the real declaration and insists that your function someFunc should be returning an int and you are a bonehead for declaring it otherwise. (This implicit declaration rule comes from the 1989 C standard; C99 removed it, and modern compilers warn about or reject calls to undeclared functions.)

The solution to such insulting compiler behavior is to either (a) move the function definition before any functions that use it; or (b) put in a declaration without a body before any functions that use it, in addition to the declaration that appears in the function definition. (Note that this violates the no separate but equal rule, but the compiler should tell you when you make a mistake.) Option (b) is generally preferred, and is the only option when the function is used in a different file.
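
Option (b) looks like this within a single file. In this sketch the caller withinRadius is hypothetical; the body-less declaration of distSquared lets it be called before its definition appears, and the compiler checks the later definition against that declaration.

```c
/* declaration (prototype): no body, terminated by a semicolon */
int distSquared(int dx, int dy);

/* uses distSquared before its definition appears in the file */
int
withinRadius(int dx, int dy, int r)
{
    return distSquared(dx, dy) <= r * r;
}

/* the definition; its header must agree with the declaration above */
int
distSquared(int dx, int dy)
{
    return dx * dx + dy * dy;
}
```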

To make sure that all declarations of a function are consistent, the usual practice is to put them in an include file. For example, if distSquared is used in a lot of places, we might put it in its own file distSquared.c:

This file uses #include to include a copy of the header file distSquared.h:

Note that the declaration in distSquared.h doesn't have a body; instead, it's terminated by a semicolon like a variable declaration. It's also worth noting that we moved the documenting comment to distSquared.h: the idea is that distSquared.h is the public face of this (very small one-function) module, and so the explanation of how to use the function should be there.

The reason distSquared.c includes distSquared.h is to get the compiler to verify that the declarations in the two files match. But to use the distSquared function, we also put #include "distSquared.h" at the top of the file that uses it:

The #include on line 1 uses double quotes instead of angle brackets; this tells the compiler to look for distSquared.h in the directory containing the file being compiled first, and only then in the system include directories (typically /usr/include).

20. Static functions

By default, all functions are global; they can be used in any file of your program whether or not a declaration appears in a header file. To restrict access to the current file, declare a function static, like this:
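
A minimal sketch using the names hello and helloHelper from the next paragraph (the bodies are assumptions):

```c
#include <stdio.h>

/* static: can only be called from code in this file */
static void
helloHelper(void)
{
    puts("hello");
}

/* not static: other files can call this, given a declaration */
void
hello(void)
{
    helloHelper();
}
```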

The function hello will be visible everywhere. The function helloHelper will only be visible in the current file.

It's generally good practice to declare a function static unless you intend to make it available, since not doing so can cause namespace conflicts, where the presence of two functions with the same name either prevents the program from linking or---even worse---causes the wrong function to be called. The latter can happen with library functions, since C allows the programmer to override library functions by defining a new function with the same name. I once had a program fail in a spectacularly incomprehensible way because I'd written a select function without realizing that select is a standard library function on Unix systems.

21. Local variables

A function may contain definitions of local variables, which are visible only inside the function and which survive only until the function returns. These may be declared at the start of any block (group of statements enclosed by braces), but it is conventional to declare all of them at the outermost block of the function.

22. Mechanics of function calls

Several things happen under the hood when a function is called. Since a function can be called from several different places, the CPU needs to store its previous state to know where to go back. It also needs to allocate space for function arguments and local variables.

Some of this information will be stored in registers, memory locations built into the CPU itself, but most will go on the stack, a region of memory that on typical machines grows downward, even though the most recent additions to the stack are called the "top" of the stack. The location of the top of the stack is stored in the CPU in a special register called the stack pointer.

So a typical function call looks like this internally:

- The current instruction pointer or program counter value, which gives the address of the next line of machine code to be executed, is pushed onto the stack.

- Any arguments to the function are copied either into specially designated registers or onto new locations on the stack. The exact rules for how to do this vary from one CPU architecture to the next, but a typical convention might be that the first four arguments or so are copied into registers and the rest (if any) go on the stack.

- The instruction pointer is set to the first instruction in the code for the function.

- The function allocates additional space on the stack to hold its local variables (if any) and to save copies of the values of any registers it wants to use (so that it can restore their contents before returning to its caller).

- The function body is executed until it hits a return statement.

- Returning from the function is the reverse of invoking it: any saved registers are restored from the stack, the return value is copied to a standard register, and the values of the instruction pointer and stack pointer are restored to what they were before the function call.

From the programmer's perspective, the important point is that both the arguments and the local variables inside a function are stored in freshly-allocated locations that are thrown away after the function exits. So after a function call the state of the CPU is restored to its previous state, except for the return value. Any arguments that are passed to a function are passed as copies, so changing the values of the function arguments inside the function has no effect on the caller. Any information stored in local variables is lost.

Under rare circumstances, it may be useful to have a variable local to a function that persists from one function call to the next. You can do so by declaring the variable static. For example, here is a function that counts how many times it has been called:
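
A minimal sketch of such a counter (the name timesCalled is hypothetical):

```c
/* Returns how many times this function has been called, including this call. */
int
timesCalled(void)
{
    static int count = 0;   /* initialized once; keeps its value between calls */

    count++;
    return count;
}
```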

Static local variables are stored outside the stack with global variables, and have unbounded extent. But they are only visible inside the function that declares them. This makes them slightly less dangerous than global variables---there is no fear that some foolish bit of code elsewhere will quietly change their value---but it is still the case that they usually aren't what you want. It is also likely that operations on static variables will be slightly slower than operations on ordinary ("automatic") variables, since making them persistent means that they have to be stored in (slow) main memory instead of (fast) registers.

23. C/InputOutput

Input and output from C programs is typically done through the standard I/O library, whose functions etc. are declared in stdio.h. A detailed description of the functions in this library is given in Appendix B of KernighanRitchie. We'll talk about some of the more useful functions and about how input-output (I/O) works on Unix-like operating systems in general.

24. Character streams

The standard I/O library works on character streams, objects that act like long sequences of incoming or outgoing characters. What a stream is connected to is often not apparent to a program that uses it; an output stream might go to a terminal, to a file, or even to another program (appearing there as an input stream).

Three standard streams are available to all programs: these are stdin (standard input), stdout (standard output), and stderr (standard error). Standard I/O functions that do not take a stream as an argument will generally either read from stdin or write to stdout. The stderr stream is used for error messages. It is kept separate from stdout so that you can see these messages even if you redirect output to a file:

$ ls no-such-file > /tmp/dummy-output
ls: no-such-file: No such file or directory

25. Reading and writing single characters

To read a single character from stdin, use getchar:

The getchar routine will return the special value EOF (usually -1; short for end of file) if there are no more characters to read, which can happen when you hit the end of a file or when the user types the end-of-file key control-D to the terminal. Note that the return value of getchar is declared to be an int since EOF lies outside the normal character range.

To write a single character to stdout, use putchar:

putchar('!');

Even though putchar can only write single bytes, it takes an int as an argument. Any value outside the range 0..255 will be truncated to its last byte, as in the usual conversion from int to unsigned char.

Both getchar and putchar are wrappers for more general routines getc and putc that allow you to specify which stream you are using. To illustrate getc and putc, here's how we might define getchar and putchar if they didn't exist already:
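
A minimal sketch; putchar2 is the name used in the next paragraph, and getchar2 is assumed by analogy:

```c
#include <stdio.h>

/* like getchar: read the next character from stdin, or EOF */
int
getchar2(void)
{
    return getc(stdin);
}

/* like putchar: write c to stdout, returning c on success or EOF on failure */
int
putchar2(int c)
{
    return putc(c, stdout);
}
```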

Note that putc, putchar2 as defined above, and the original putchar all return an int rather than void; this is so that they can signal whether the write succeeded. If the write succeeded, putchar or putc will return the value written. If the write failed (say because the disk was full), then putc or putchar will return EOF.

Here's another example of using putc to make a new function putcerr that writes a character to stderr:
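
A minimal sketch:

```c
#include <stdio.h>

/* Write one character to stderr; returns the character, or EOF on failure. */
int
putcerr(int c)
{
    return putc(c, stderr);
}
```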

A rather odd feature of the C standard I/O library is that if you don't like the character you just got, you can put it back using the ungetc function. The limitations on ungetc are that (a) you can only push one character back, and (b) that character can't be EOF. The ungetc function is provided because it makes certain high-level input tasks easier; for example, if you want to parse a number written as a sequence of digits, you need to be able to read characters until you hit the first non-digit. But if the non-digit is going to be used elsewhere in your program, you don't want to eat it. The solution is to put it back using ungetc.

Here's a function that uses ungetc to peek at the next character on stdin without consuming it:
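
A minimal sketch of peekchar, the name used by readNumber below. It reads one character and, unless it got EOF (which ungetc cannot push back), returns it to the stream.

```c
#include <stdio.h>

/* Return the next character on stdin without consuming it (or EOF). */
int
peekchar(void)
{
    int c;

    c = getchar();
    if(c != EOF) {
        ungetc(c, stdin);   /* put it back for the next reader */
    }

    return c;
}
```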

26. Formatted I/O

Reading and writing data one character at a time can be painful. The C standard I/O library provides several convenient routines for reading and writing formatted data. The most commonly used one is printf, which takes as arguments a format string followed by zero or more values that are filled in to the format string according to patterns appearing in it.

Here are some typical printf statements:

printf("Hello\n");  /* print "Hello" followed by a newline */
printf("%c", c);    /* equivalent to putchar(c) */
printf("%d", n);    /* print n (an int) formatted in decimal */
printf("%u", n);    /* print n (an unsigned int) formatted in decimal */
printf("%o", n);    /* print n (an unsigned int) formatted in octal */
printf("%x", n);    /* print n (an unsigned int) formatted in hexadecimal */
printf("%f", x);    /* print x (a float or double) */

/* print total (an int) and average (a double) on two lines with labels */
printf("Total: %d\nAverage: %f\n", total, average);

For a full list of formatting codes see Table B-1 in KernighanRitchie, or run man 3 printf.

The inverse of printf is scanf. The scanf function reads formatted data from stdin according to the format string passed as its first argument and stuffs the results into variables whose addresses are given by the later arguments. This requires prefixing each such argument with the & operator, which takes the address of a variable.

Format strings for scanf are close enough to format strings for printf that you can usually copy them over directly. However, because scanf arguments don't go through argument promotion (where all small integer types are converted to int and floats are converted to double), you have to be much more careful about specifying the type of the argument correctly.

scanf("%c", &c);    /* like c = getchar(); c must be a char */
scanf("%d", &n);    /* read an int formatted in decimal */
scanf("%u", &n);    /* read an unsigned int formatted in decimal */
scanf("%o", &n);    /* read an unsigned int formatted in octal */
scanf("%x", &n);    /* read an unsigned int formatted in hexadecimal */
scanf("%f", &x);    /* read a float */
scanf("%lf", &x);   /* read a double */

/* read total (an int) and average (a float) on two lines with labels */
/* (will also work if input is missing newlines or uses other whitespace, see below) */
scanf("Total: %d\nAverage: %f\n", &total, &average);

The scanf routine eats whitespace (spaces, tabs, newlines, etc.) in its input whenever it sees a conversion specification or a whitespace character in its format string. Non-whitespace characters that are not part of conversion specifications must match exactly. To detect if scanf parsed everything successfully, look at its return value; it returns the number of values it filled in, or EOF if it hits end-of-file before filling in any values.

The printf and scanf routines are wrappers for fprintf and fscanf, which take a stream as their first argument, e.g.:

fprintf(stderr, "BUILDING ON FIRE, %d%% BURNT!!!\n", percentage);

Note the use of "%%" to print a single percent in the output.

27. Rolling your own I/O routines

Since we can write our own functions in C, if we don't like what the standard routines do, we can build our own on top of them. For example, here's a function that reads in integer values without leading minus signs and returns the result. It uses the peekchar routine we defined above, as well as the isdigit routine declared in ctype.h.

/* read an integer written in decimal notation from stdin until the first
 * non-digit and return it. Returns 0 if there are no digits. */
int
readNumber(void)
{
    int accumulator;    /* the number so far */
    int c;              /* next character */

    accumulator = 0;

    while((c = peekchar()) != EOF && isdigit(c)) {
        c = getchar();              /* consume it */
        accumulator *= 10;          /* shift previous digits over */
        accumulator += (c - '0');   /* add decimal value of new digit */
    }

    return accumulator;
}

Here's another implementation that does almost the same thing:
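
A plausible version is built on scanf; this sketch assumes that, and the original listing may differ. Note that the %d conversion, besides skipping leading whitespace, also accepts a leading + or - sign, which readNumber does not.

```c
#include <stdio.h>

/* Sketch of readNumber2 using scanf (an assumption). Returns 0 if no
 * number can be read, matching readNumber's convention. */
int
readNumber2(void)
{
    int n;

    if(scanf("%d", &n) == 1) {
        return n;
    }

    return 0;
}
```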

The difference is that readNumber2 will consume any whitespace before the first digit, which may or may not be what we want.

More complex routines can be used to parse more complex input. For example, here's a routine that uses readNumber to parse simple arithmetic expressions, where each expression is either a number or of the form (expression+expression) or (expression*expression). The return value is the value of the expression after adding together or multiplying all of its subexpressions. (A complete program including this routine and the others defined earlier that it uses can be found in calc.c.)

#define EXPRESSION_ERROR (-1)

/* read an expression from stdin and return its value */
/* returns EXPRESSION_ERROR on error */
int
readExpression(void)
{
    int e1;     /* value of first sub-expression */
    int e2;     /* value of second sub-expression */
    int c;
    int op;     /* operation: '+' or '*' */

    c = peekchar();

    if(c == '(') {
        c = getchar();

        e1 = readExpression();
        op = getchar();
        e2 = readExpression();

        c = getchar();  /* this had better be ')' */
        if(c != ')') return EXPRESSION_ERROR;

        /* else */
        switch(op) {
        case '*':
            return e1*e2;
            break;
        case '+':
            return e1+e2;
            break;
        default:
            return EXPRESSION_ERROR;
            break;
        }
    } else if(isdigit(c)) {
        return readNumber();
    } else {
        return EXPRESSION_ERROR;
    }
}

Because this routine calls itself recursively as it works its way down through the input, it is an example of a recursive descent parser. Parsers for more complicated languages (e.g. C) are usually not written by hand like this, but are instead constructed mechanically using a Parser generator.

28. File I/O

Reading and writing files is done by creating new streams attached to the files. The function that does this is fopen. It takes two arguments: a filename, and a flag that controls whether the file is opened for reading or writing. The return value of fopen has type FILE * and can be used in putc, getc, fprintf, etc. just like stdin, stdout, or stderr. When you are done using a stream, you should close it using fclose.

Here's a program that reads a list of numbers from a file whose name is given as argv[1] and prints their sum:

#include <stdio.h>
#include <stdlib.h>

int
main(int argc, char **argv)
{
    FILE *f;
    int x;
    int sum;

    if(argc < 2) {
        fprintf(stderr, "Usage: %s filename\n", argv[0]);
        exit(1);
    }

    f = fopen(argv[1], "r");
    if(f == 0) {
        /* perror is a standard C library routine */
        /* that prints a message about the last failed library routine */
        /* prepended by its argument */
        perror(argv[1]);
        exit(2);
    }

    /* else everything is ok */
    sum = 0;
    while(fscanf(f, "%d", &x) == 1) {   /* read from f, not stdin */
        sum += x;
    }

    printf("%d\n", sum);

    /* not strictly necessary but it's polite */
    fclose(f);

    return 0;
}

To write to a file, open it with fopen(filename, "w"). Note that as soon as you call fopen with the "w" flag, any previous contents of the file are erased. If you want to append to the end of an existing file, use "a" instead. You can also add + onto the flag if you want to read and write the same file (this will probably involve using fseek).
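
For example, here is a sketch of a hypothetical helper appendLine that uses "a" so that repeated calls add lines to the end of a file instead of erasing it:

```c
#include <stdio.h>

/* Append one line to the named file, creating the file if necessary.
 * Returns 0 on success, -1 if the file could not be opened. */
int
appendLine(const char *filename, const char *line)
{
    FILE *f;

    f = fopen(filename, "a");   /* "a": keep old contents, write at the end */
    if(f == 0) {
        perror(filename);
        return -1;
    }

    fputs(line, f);
    putc('\n', f);
    fclose(f);

    return 0;
}
```

Opening with "w" instead would leave only the most recent line in the file.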

Some operating systems (Windows) make a distinction between text and binary files. For text files, use the same arguments as above. For binary files, add a b, e.g. fopen(filename, "wb") to write a binary file.

/* leave a greeting in the current directory */

#include <stdio.h>
#include <stdlib.h>

#define FILENAME "hello.txt"
#define MESSAGE "hello world"

int
main(int argc, char **argv)
{
    FILE *f;

    f = fopen(FILENAME, "w");
    if(f == 0) {
        perror(FILENAME);
        exit(1);
    }

    /* unlike puts, fputs doesn't add a newline */
    fputs(MESSAGE, f);
    putc('\n', f);

    fclose(f);

    return 0;
}

29. C/Pointers

30. Memory and addresses

Memory in a typical modern computer is divided into two classes: a small number of registers, which live on the CPU chip and perform specialized functions like keeping track of the location of the next machine code instruction to execute or the current stack frame, and main memory, which (mostly) lives outside the CPU chip and which stores the code and data of a running program. When the CPU wants to fetch a value from a particular location in main memory, it must supply an address: a 32-bit or 64-bit unsigned integer on typical current architectures, referring to one of up to 2^32 or 2^64 distinct 8-bit locations in the memory. These integers can be manipulated like any other integer; in C, they appear as pointers, a family of types that can be passed as arguments, stored in variables, returned from functions, etc.

31. Pointer variables

31.1. Declaring a pointer variable

The convention in C is that the declaration of a complex type looks like its use. To declare a pointer-valued variable, write a declaration for the thing that it points to, but include a * before the variable name:

31.2. Assigning to pointer variables

Declaring a pointer-valued variable allocates space to hold the pointer but not to hold anything it points to. Like any other variable in C, a pointer-valued variable will initially contain garbage---in this case, the address of a location that might or might not contain something important. To initialize a pointer variable, you have to assign to it the address of something that already exists. Typically this is done using the & (address-of) operator:

31.3. Using a pointer

Pointer variables can be used in two ways: to get their value (a pointer), e.g. if you want to assign an address to more than one pointer variable:

But more often you will want to work on the value stored at the location pointed to. You can do this by using the * (dereference) operator, which acts as an inverse of the address-of operator:

The * operator binds very tightly, so you can usually use *p anywhere you could use the variable it points to without worrying about parentheses. However, a few operators, such as postfix -- and ++, [], and . (used in C/Structs), bind tighter, requiring parentheses if you want the * to take precedence.

31.4. Printing pointers

You can print a pointer value using printf with the %p format specifier, which expects an argument of type void *. To do so, you should convert the pointer to type void * first using a cast (see below for void * pointers). On machines that don't have different representations for different pointer types, leaving out the cast usually works, but the C standard does not guarantee it.

Here is a short program that prints out some pointer values:

```c
#include <stdio.h>
#include <stdlib.h>

int G = 0;  /* a global variable, stored in BSS segment */

int
main(int argc, char **argv)
{
    static int s;  /* static local variable, stored in BSS segment */
    int a;         /* automatic variable, stored on stack */
    int *p;        /* pointer variable for malloc below */

    /* obtain a block big enough for one int from the heap */
    p = malloc(sizeof(int));

    printf("&G = %p\n", (void *) &G);
    printf("&s = %p\n", (void *) &s);
    printf("&a = %p\n", (void *) &a);
    printf("&p = %p\n", (void *) &p);
    printf("p = %p\n", (void *) p);
    /* converting a function pointer to void * is not guaranteed by
       ISO C, but POSIX systems and common compilers support it */
    printf("main = %p\n", (void *) main);

    free(p);

    return 0;
}
```

When I run this on a Mac OS X 10.6 machine after compiling with gcc, the output is:

```
&G = 0x100001078
&s = 0x10000107c
&a = 0x7fff5fbff2bc
&p = 0x7fff5fbff2b0
p = 0x100100080
main = 0x100000e18
```

The interesting thing here is that we can see how the compiler chooses to allocate space for variables based on their storage classes. The global variable G and the static local variable s both persist between function calls, so they get placed in the BSS segment (the .bss section of the program). That segment starts somewhere around 0x100000000, typically after the code segment containing the actual code of the program. Local variables a and p are allocated on the stack, which grows down from somewhere near the top of the address space. The block returned by malloc, which p points to, is allocated from the heap, a region of memory that starts after the BSS segment and may grow over time. Finally, main appears at 0x100000e18; this is in the code segment, which is a bit lower in memory than all the global variables.

32. The null pointer

The special value 0, known as the null pointer, may be assigned to a pointer of any type. The macro NULL, defined in <stddef.h>, <stdio.h>, <stdlib.h>, and other standard headers, is the conventional way to write it. It may or may not be represented by the actual address 0, but it acts like 0 in comparisons and tests (e.g., it has the value false in an if or while statement). Null pointers are often used to indicate missing data or failed function calls. Attempting to dereference a null pointer can have catastrophic effects, so it's important to be aware of when you might be supplied with one.

33. Pointers and functions

A simple application of pointers is to get around C's limit on having only one return value from a function. Because C arguments are copied, assigning a value to an argument inside a function has no effect on the outside. So a doubler function that takes an int and doubles its own argument doesn't do much: if we call it on a variable y, y is left unchanged.

However, if instead of passing the value of y into doubler we pass a pointer to y, then the doubler function can reach out of its own stack frame to manipulate y itself.

Generally, if you pass the value of a variable into a function (with no &), you can be assured that the function can't modify your original variable. When you pass a pointer, you should assume that the function can and will change the variable's value. If you want to write a function that takes a pointer argument but promises not to modify the target of the pointer, declare the parameter with const, e.g. as const int * rather than int *.

The const qualifier tells the compiler that the target of the pointer shouldn't be modified, so the compiler will report an error if you try to assign to the target anyway.

Passing const pointers is mostly used when passing large structures to functions, where copying a pointer (typically 4 or 8 bytes) is cheaper than copying the thing it points to.

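If you really want to modify the target anyway, C lets you "cast away const" by converting the pointer to a non-const pointer type. This is only safe if the object being pointed to was not itself declared const; writing to a genuinely const object this way has undefined behavior.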

There is usually no good reason to do this; the one exception might be if the target of the pointer represents an AbstractDataType, and you want to modify its representation during some operation to optimize things somehow in a way that will not be visible outside the abstraction barrier, making it appear to leave the target constant.

Note that while it is safe to pass pointers down into functions, it is very dangerous to pass pointers up. The reason is that the space used to hold any local variable of the function will be reclaimed when the function exits, but the pointer will still point to the same location, even though something else may now be stored there. So a function that returns the address of one of its own local variables is very dangerous.

An exception is when you can guarantee that the location pointed to will survive even after the function exits, e.g. when the location is dynamically allocated using malloc (see below) or when the local variable is declared static.

Usually returning a pointer to a static local variable is not good practice, since the point of making a variable local is to keep outsiders from getting at it. If you find yourself tempted to do this, a better approach is to allocate a new block using malloc (see below) and return a pointer to that. The downside of the malloc method is that the caller has to promise to call free on the block later, or you will get a storage leak.

34. Pointer arithmetic and arrays

Because pointers are just numerical values, one can do arithmetic on them. Specifically, it is permitted to:

- Add an integer to a pointer or subtract an integer from a pointer. The effect of p+n, where p is a pointer and n is an integer, is to compute the address equal to p plus n times the size of whatever p points to (this is why int * pointers and char * pointers aren't the same).

- Subtract one pointer from another. The two pointers must have the same type (e.g. both int * or both char *) and should point into the same array. The result is an integer (of type ptrdiff_t, defined in <stddef.h>) equal to the numerical difference between the addresses divided by the size of the objects pointed to.

- Compare two pointers using ==, !=, <, >, <=, or >=. The ordering comparisons are only meaningful for pointers into the same array.

- Increment or decrement a pointer using ++ or --.

The main application of pointer arithmetic in C is in arrays. An array is a block of memory that holds one or more objects of a given type. It is declared by giving the type of object the array holds followed by the array name and the size in square brackets. For example, int a[50]; declares an array of 50 ints, and char *cp[100]; declares an array of 100 pointers to char.

Declaring an array allocates enough space to hold the specified number of objects (e.g. 200 bytes for a above and 400 for cp, assuming 4-byte ints and 4-byte pointers---note that a char * is an address, so it is much bigger than a char). In C89 the number inside the square brackets must be a constant whose value can be determined at compile time; C99 relaxes this for local arrays (variable-length arrays).

The array name acts like a constant pointer to the zeroth element of the array. It is thus possible to set or read the zeroth element using *a. But because the array name is constant, you can't assign to it: a statement like a = p; is rejected by the compiler.

More common is to use square brackets to refer to a particular element of the array. The expression a[n] is defined to be equivalent to *(a+n); the index n (an integer) is added to the base of the array (a pointer), to get to the location of the n-th element of a. The implicit * then dereferences this location so that you can read its value (in a normal expression) or assign to it (on the left-hand side of an assignment operator). The effect is to allow you to use a[n] just as you would any other variable of type int (or whatever type a was declared as).

Note that C doesn't do any sort of bounds checking. Given the declaration int a[50];, only indices from a[0] to a[49] can be used safely. However, the compiler will not blink at a[-12] or a[10000]. If you read from such a location you will get garbage data; if you write to it, you will overwrite god-knows-what, possibly trashing some other variable somewhere else in your program or some critical part of the stack (like the location to jump to when you return from a function). It is up to you as a programmer to avoid such buffer overruns, which can lead to very mysterious (and in the case of code that gets input from a network, security-damaging) bugs. The valgrind program can help detect such overruns in some cases (see C/valgrind).

Another curious feature of the definition of a[n] as identical to *(a+n) is that it doesn't actually matter which of the array name or the index goes inside the square brackets. So all of a[0], *a, and 0[a] refer to the zeroth entry in a. Unless you are deliberately trying to obfuscate your code, it's best to write what you mean.

34.1. Arrays and functions

Because array names act like pointers, they can be passed into functions that expect pointers as their arguments. For example, here is a function that computes the sum of all the values in an array a of size n:

Note the use of const to promise that sumArray won't modify the contents of a.

Another way to write the function header is to declare a as an array of unknown size, writing const int a[] instead of const int *a.

This has exactly the same meaning to the compiler as the previous definition. Even though normally the declarations int a[10] and int *a mean very different things (the first one allocates space to hold 10 ints, and prevents assigning a new value to a), in a function parameter list int a[] is just SyntacticSugar for int *a. You can even modify what a points to inside sumArray by assigning to it. This will allow you to do things that you usually don't want to do.

34.2. Multidimensional arrays

Arrays can themselves be members of arrays. The result is a multidimensional array, where a value in row i and column j is accessed by a[i][j].

Declaration is similar to one-dimensional arrays:

```c
int a[3][6];  /* declares an array of 3 rows of 6 ints each */
```

This declaration produces an array of 18 int values, packed contiguously in memory. The interpretation is that a is an array of 3 objects, each of which is an array of 6 ints.

If we imagine the array to contain increasing values like this:

```
 0  1  2  3  4  5
 6  7  8  9 10 11
12 13 14 15 16 17
```

the actual positions in memory will look like this:

```
 0  1  2  3  4  5  6  7  8  9 10 11 12 13 14 15 16 17
 ^                 ^                 ^
a[0]              a[1]              a[2]
```

To look up a value, we do the usual array-indexing magic. Suppose we want to find a[1][4]. The name a acts as a pointer to the base of the array. The name a[1] says to skip ahead 1 times the size of the things pointed to by a, which are arrays of 6 ints each, for a total size of 24 bytes assuming 4-byte ints. For a[1][4], we start at a[1] and move forward 4 times the size of the thing pointed to by a[1], which is an int; this puts us 24+16 bytes from a, the position of 10 in the picture above.